The Counselor

video

Technology: Adobe After Effects, filmed with iPhone 8

A counseling engineer conducts a therapy session for an AI home system and its human owner.

The Counselor makes public a counseling session between Reena and her AI home. The pair’s difficulty getting along is a foreseeable and common phenomenon, one likely to grow as AI becomes more embedded in daily life. Even with every building block of customizable convenience we could want, AI may still fail to understand us well enough, because humans will always expect more from a companion they see as emotionally intelligent.

A counselor travels through various social spaces, resolving the incompatibility issues that arise between AI-built systems and human beings. Inspired by engineering psychology, a field that improves human-machine relationships by redesigning interactions and environments, the video rethinks the drawbacks of a modular system and raises several questions: Will there be a growing need for a role that intersects technology and psychology, one that works on the bond between non-human intelligence and humanity? What really needs to be built into an AI for us to get along with it? Will future engineers mostly be providing engineered therapy sessions to maintain a harmonious bond between us and AI?

Keywords: Emotional Intelligence, Emotional Engineering, Artificial Intelligence, Non-Human Counseling

Prompt: Futures of Work

“Make a video vignette and accompanying written statement that critically and creatively explores an aspect of the future of work.” (Studio, MFA Media Design Practices, ArtCenter)

Before jumping into making anything fictional and futuristic, it is important to go back and observe current reality, and from that point move in a direction that is imaginable, yet critical and speculative enough to open up alternative perspectives.

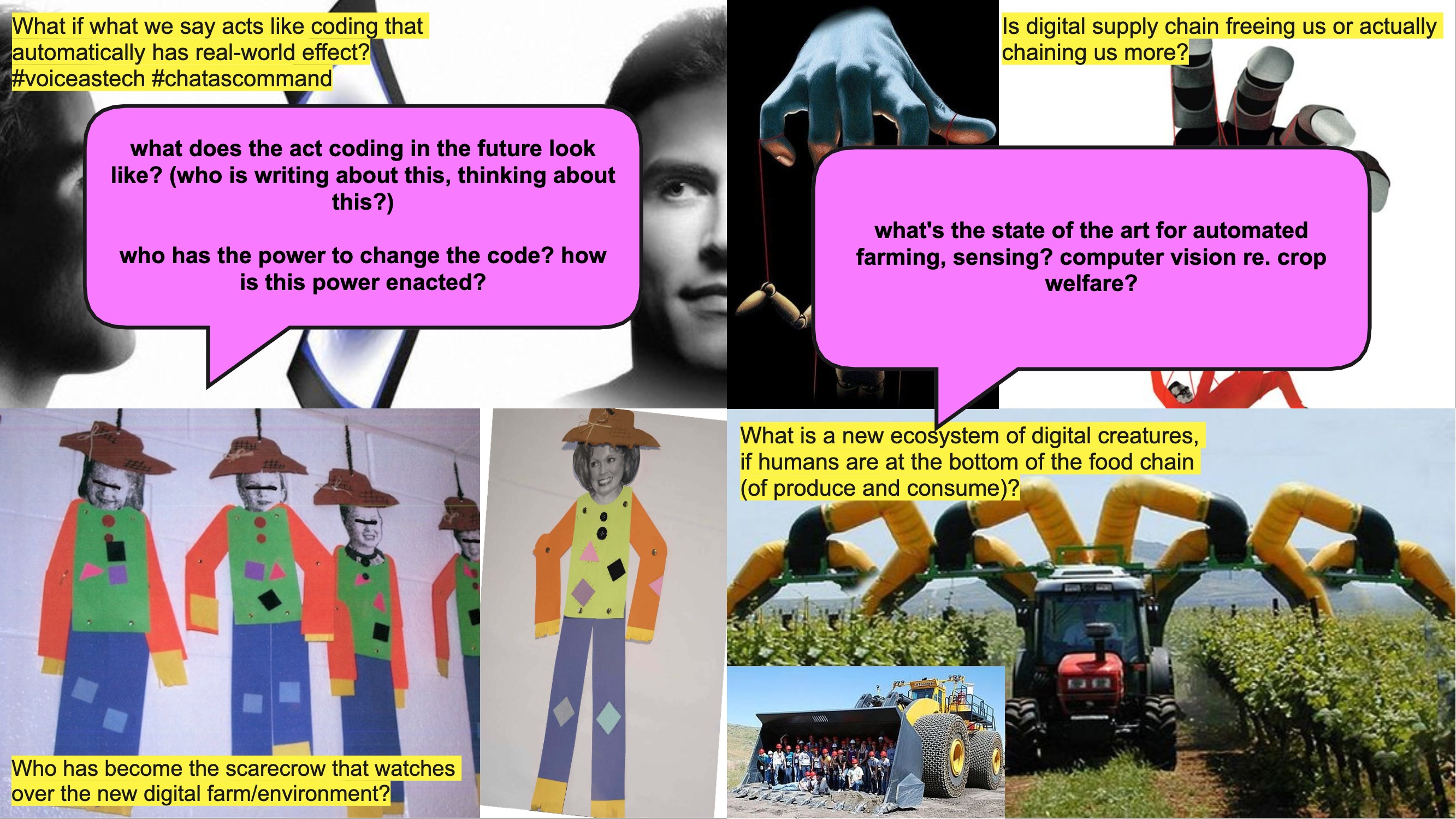

The project started with brainstorming exercises, lasting about 1.5 months, that expanded on the topic of FUTURES OF WORK. Our studio was first invited to think about the topic’s intersections with the concept of CODE AS AGENT and with a site of examination: the California Central Valley.

(phrase associations with studio peers, MFA Media Design Practices, ArtCenter)

These conversations led me to think about the relationship between code and programming languages on one hand, and societal terms and the languages we speak as human beings on the other: the mapping of code/coded onto signifier/signified, and coding in action.

How can we speak code?

(note: pink word bubbles were responses from the studio instructors)

What are the invisibles vs. the visibles? Abilities vs. disabilities? Is there a universal language among all? What would be considered an accent when speaking code? Many more questions arose in my personal explorations.

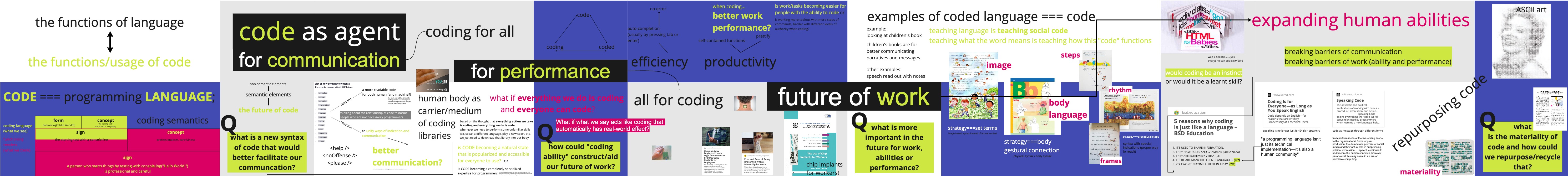

To focus more on the WORK aspect, the next exercise was to create a 30-second video that defamiliarizes something about work itself.

I jumped off from my previous interest in speaking code and explored what gives work its value. What if WORK is about unmaking/deconstructing/breaking things down (with the ultimate goal of creating something new)? In my speculation, WORK would not necessarily be a linear process. There was also an element of emotion involved in the process.

The mental dismantling in this exercise raised the question of what could be reused after deconstruction. I researched modular systems and how they are implemented in various fields of making.


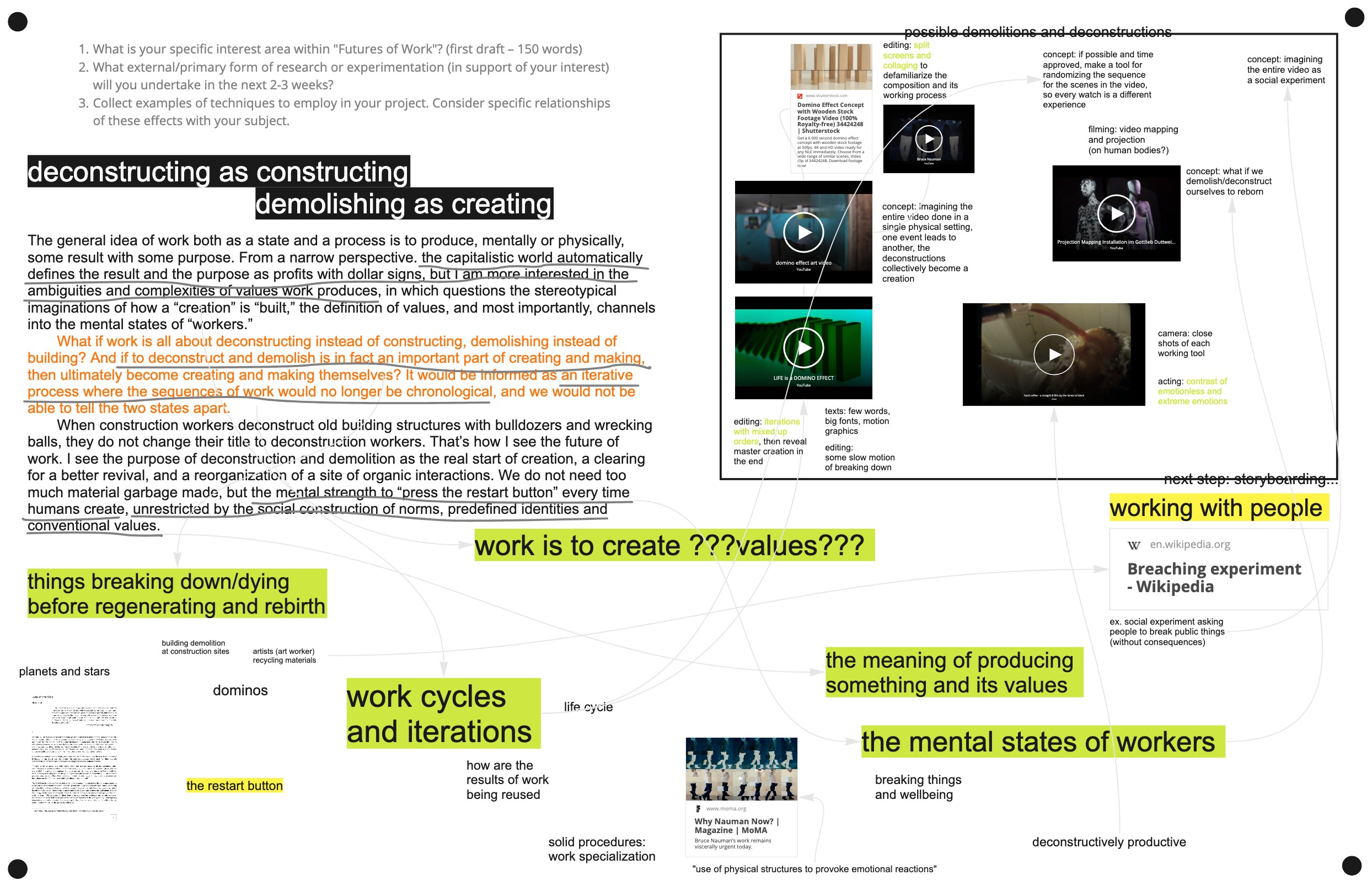

I was also imagining a magical cafe where people would come to the counter and order how they wanted their houses, talent workers, or anything else to be built (a + b + c), with a professional emotional sprinkler assembling the modules and adding a final sprinkle of emotion at the end. My ideas were jumping in all directions: I also researched business models and reverse-engineering art, and played with a box of toy bricks. From the feedback, I was essentially asked:

Is there a kind of modular system/thinking in my making process?

How do I see it fitting into the global economy?

What is smart making?

Connecting the dots that grew out of emotional building in modular processes, I began to ask how the system would be maintained during those processes. I looked into modular AI systems and arrived at this question:

AI finetuning = ? = Humans taking therapy?

This led back to my original inquiry into how we could speak and communicate through code. In a sense, modular thinking about what can be reused relates to the act of combining functions and components in code. I then discovered the field of engineering psychology and started thinking about ways to remap it onto intelligent systems.

If humans need counseling and therapy, why wouldn’t AI and machines need them too? Just as human beings have issues with other human beings, why wouldn’t we expect issues between human beings and machine intelligence serious enough to bring to a therapy session? Perhaps there could even be sessions where both parties are some form of non-human intelligence, where the emotional intelligence involved only gets more complicated.

Unsurprisingly, there are already many AI therapy services online that people can “comfortably” consult. But there don’t seem to be any therapists made for the AI systems themselves. Will there be a role that deals with these issues in the futures of work? If so, how would these characters communicate? Perhaps in this video the counselor, the human, and the AI home system are already speaking to each other in code (and the dialogue has been translated into old human English for viewing purposes).

You can view the final video at the very top of this page.
